Ray Reconstruction: The new feature brought by DLSS 3.5

In this article, we’ll take a look at some of the exclusive technologies that Nvidia GeForce RTX 4000 series graphics cards offer. We explain the most significant new features currently supported by the GeForce ecosystem and run tests showing how they affect performance in Cyberpunk 2077 with the new Phantom Liberty expansion. We’ll also look at what they do to image quality.

DLSS 3.5: Ray Reconstruction

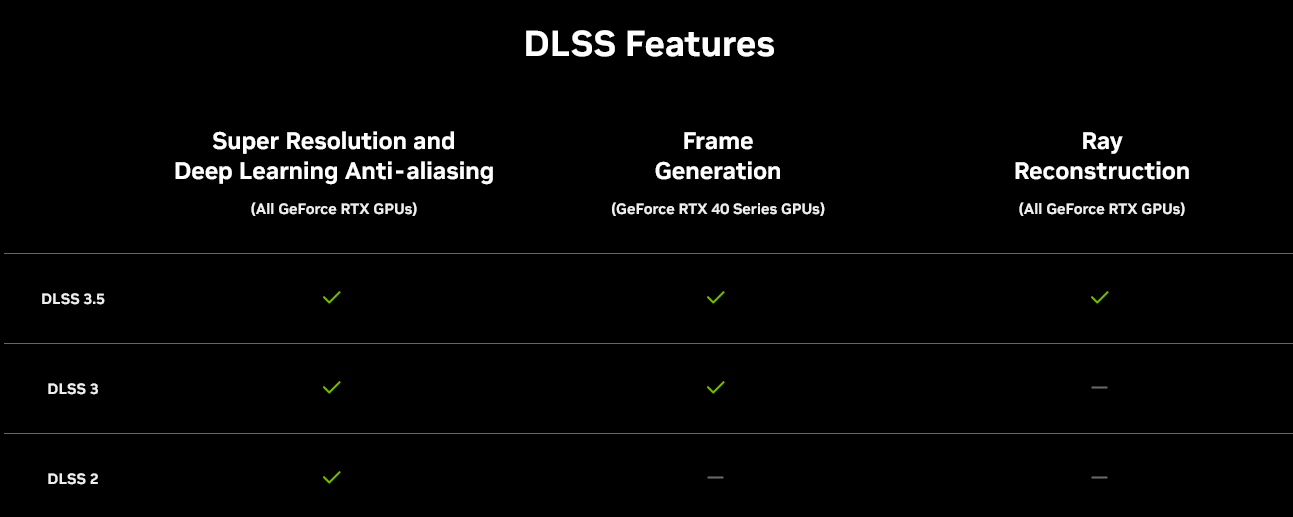

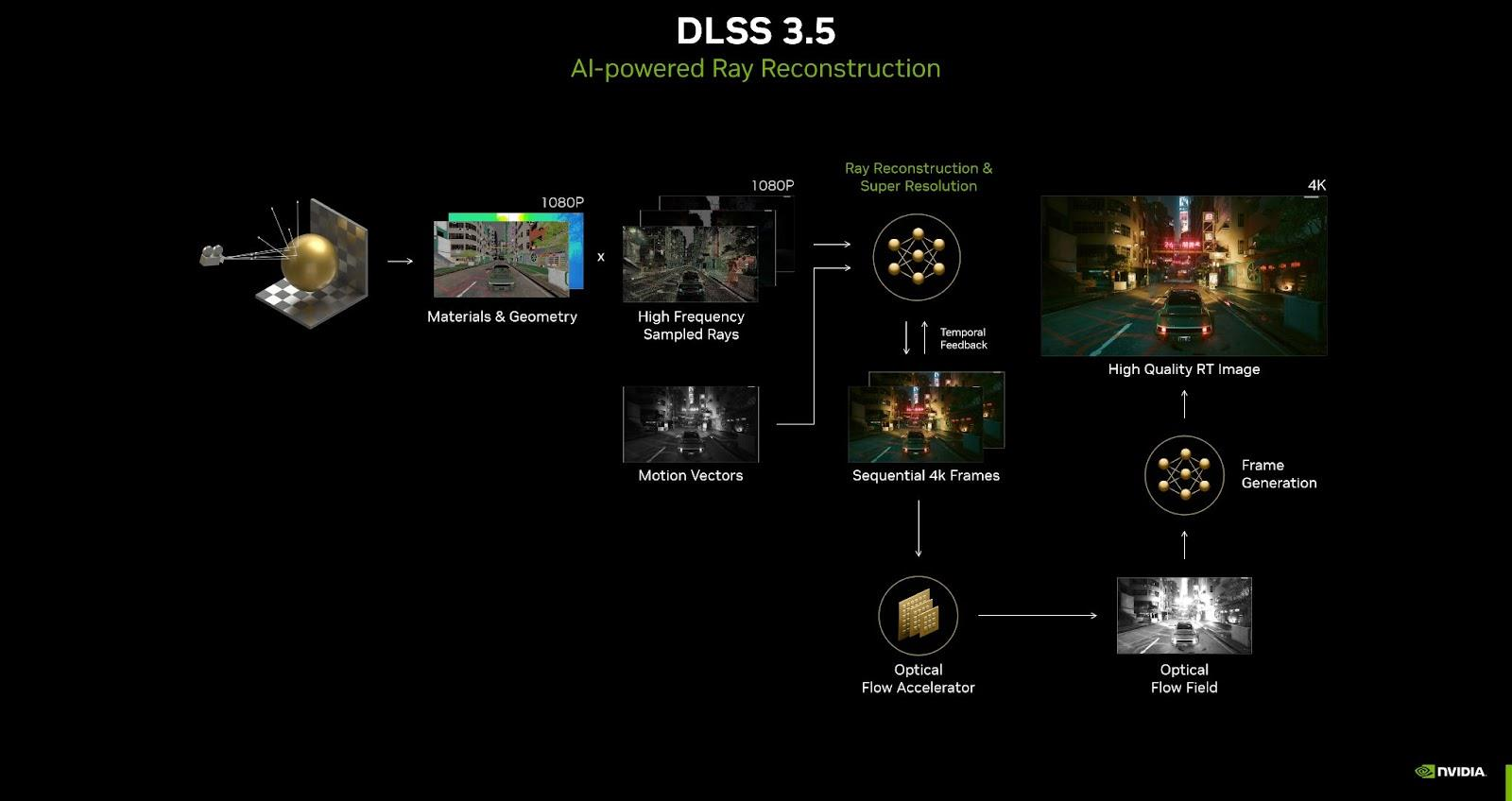

The latest exclusive technology Nvidia has brought to GeForce RTX users is Ray Reconstruction, which recently debuted as part of DLSS 3.5. DLSS 3.5 also incorporates DLSS 2.x upscaling and the previously discussed Frame Generation, which was introduced with DLSS 3. However, a game using DLSS 3.5 does not necessarily use Frame Generation. While Frame Generation is limited to GeForce RTX 4000 cards because it relies on their Optical Flow Accelerators, the other DLSS 3.5 components can also be used by owners of the previous GeForce RTX 3000 and RTX 2000 generations. That includes the new Ray Reconstruction feature, which works on Turing and Ampere GPUs.

Ray Reconstruction is a new feature that affects both upscaling (super resolution, in the sense introduced by DLSS 2.x) and ray tracing effects in games (i.e. “RTX” or “DXR”). Its main benefit should be better image quality, but as a side effect it can also improve performance.

Denoising within ray tracing

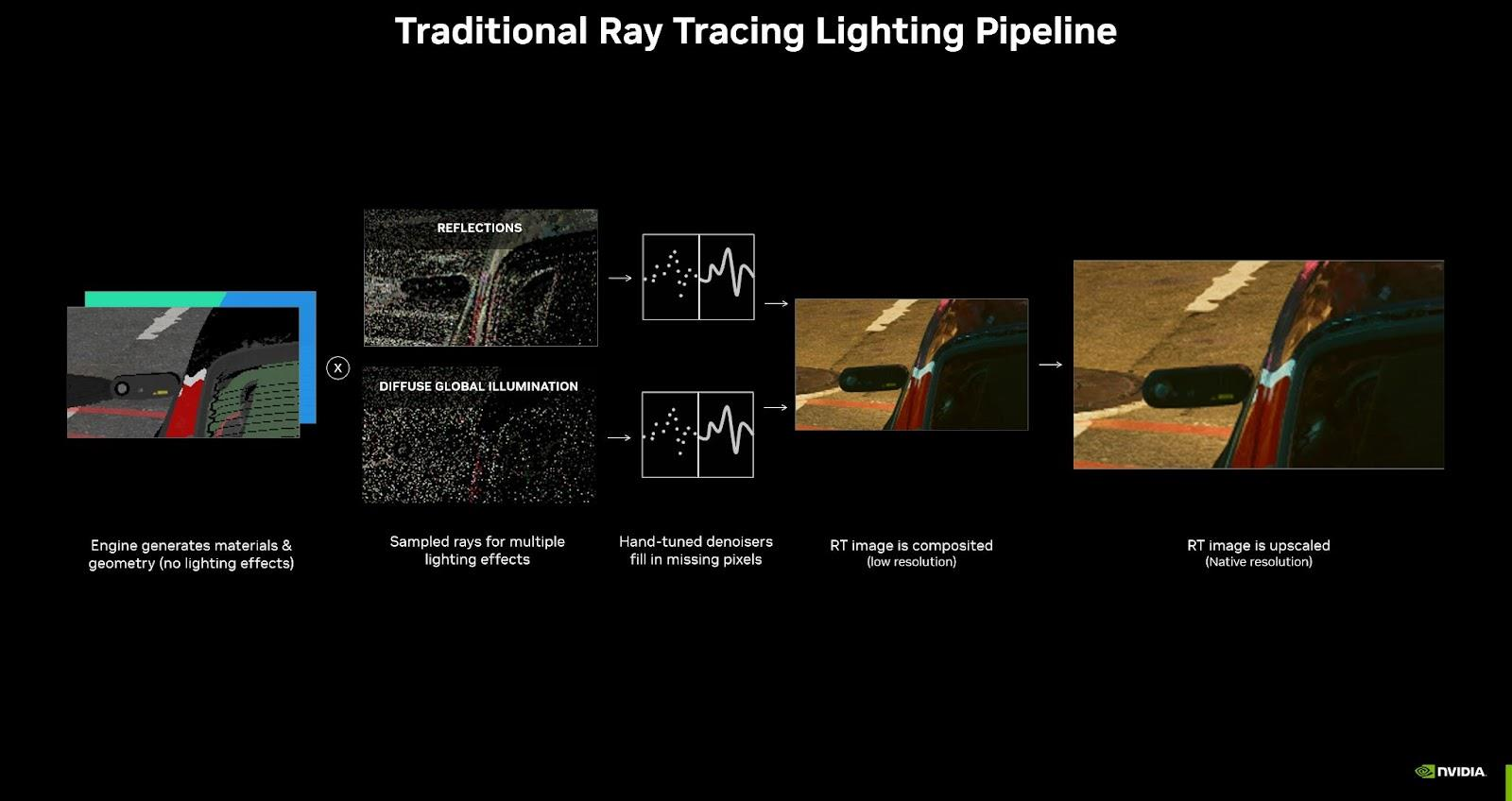

As you probably know, rendering a scene with ray tracing requires tracing a large number of light rays as they hit objects and reflect off them. The problem in games is that there is not enough performance to calculate as many rays as would be needed, so only a relatively small number of them are traced. Instead of a clean, complete picture, you end up with a snapshot that lacks a continuous image: it contains only sparse individual pixels, forming a noisy image with gaps between them.
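The noise described above is what a Monte Carlo estimate produces when it has too few samples. The following minimal Python sketch is purely illustrative and not taken from any game or from Nvidia: it assumes a hypothetical `sample_light` function in which each ray either reaches a light (contributing 1.0) or misses (0.0), with the pixel’s true brightness as the hit probability. Averaging more samples per pixel (spp) gives a cleaner estimate.

```python
import random

def sample_light(true_value: float, rng: random.Random) -> float:
    """Hypothetical ray sample: hits a light (1.0) with probability equal to
    the pixel's true brightness, otherwise contributes nothing (0.0)."""
    return 1.0 if rng.random() < true_value else 0.0

def render(true_image: list[float], spp: int, seed: int = 0) -> list[float]:
    """Estimate each pixel by averaging `spp` random samples per pixel."""
    rng = random.Random(seed)
    return [sum(sample_light(t, rng) for _ in range(spp)) / spp for t in true_image]

def mean_abs_error(a: list[float], b: list[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

# A flat grey "image" of 1,000 pixels with true brightness 0.3 everywhere.
truth = [0.3] * 1000
for spp in (1, 4, 64):
    # With 1 spp every pixel is either black or white: pure noise.
    print(spp, round(mean_abs_error(render(truth, spp), truth), 3))
```

The usual Monte Carlo behaviour applies: the error falls roughly with the square root of the sample count, so halving the noise costs about four times as many rays, which is exactly the budget games do not have.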

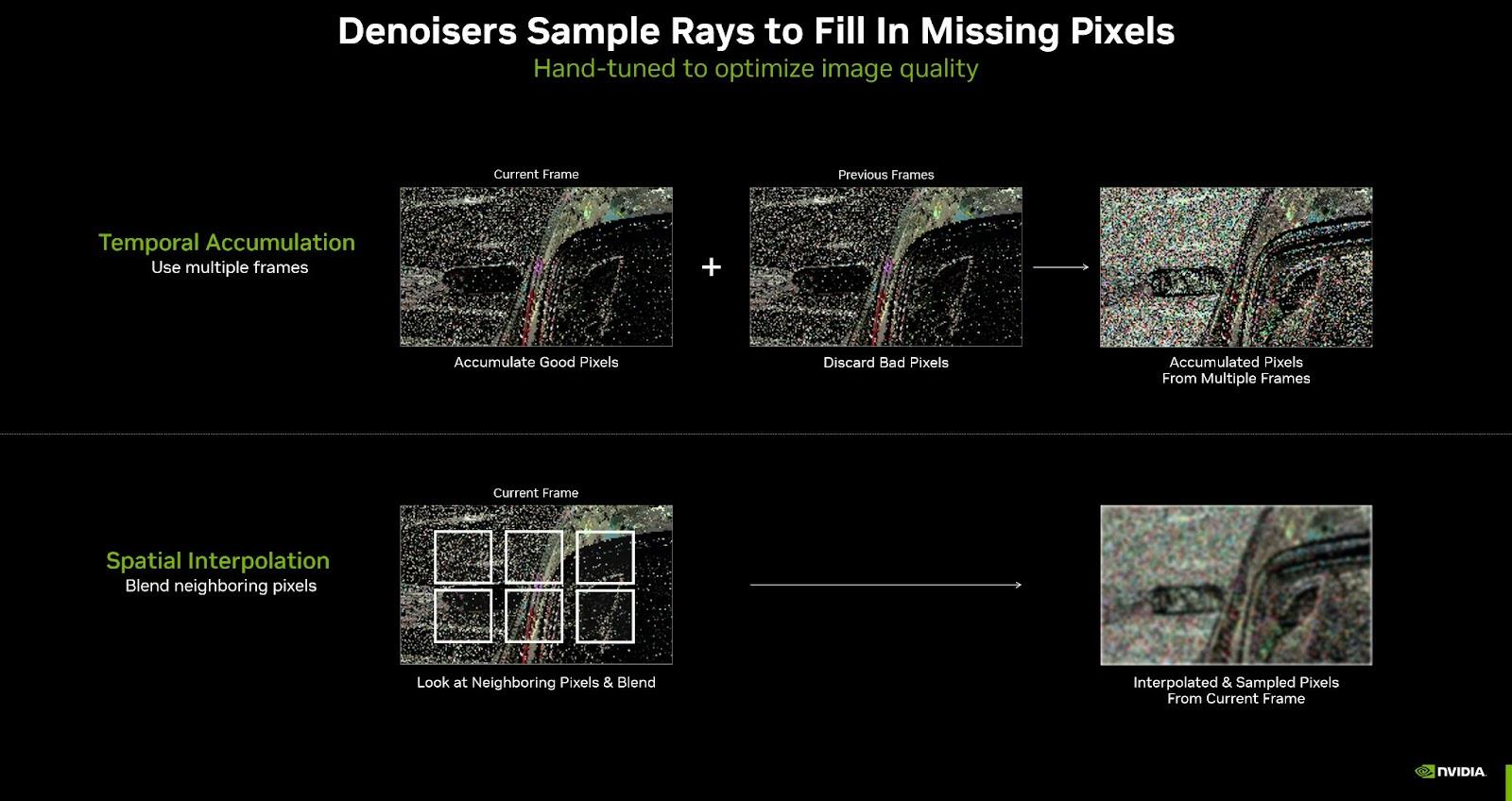

Game implementations of raytracing in DXR (DirectX Raytracing) have used denoisers (filters that remove visual noise) from the beginning to smooth, fill in and suppress these discontinuities so that the raw sparse image looks normal in the game. In today’s games, these denoisers are typically implemented with various traditional algorithms, often several of them combined. They can use temporal smoothing (combining information from multiple consecutive frames) as well as spatial smoothing (smoothing within a single frame only). These are similar to algorithms you may know from video filtering, except that here they run on GPU shaders. And just as in video processing, such filters can also blur detail or cause artifacts.
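The two traditional strategies can be sketched in a few lines of Python on a single 1-D scanline. This is a toy under stated assumptions (uniform noise, a static scene, and a box filter and an exponential moving average as stand-ins for real game denoisers), not any particular engine’s code.

```python
import random

def spatial_box_filter(row: list[float], radius: int = 1) -> list[float]:
    """Spatial denoising: average each pixel with its neighbours in the same frame."""
    out = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out

def temporal_accumulate(history: list[float], current: list[float], alpha: float = 0.1) -> list[float]:
    """Temporal denoising: blend the same pixel across frames (exponential moving average)."""
    return [(1 - alpha) * h + alpha * c for h, c in zip(history, current)]

def noisy_frame(truth: list[float], rng: random.Random, noise: float = 0.3) -> list[float]:
    """Hypothetical noisy ray-traced frame: ground truth plus uniform noise."""
    return [t + rng.uniform(-noise, noise) for t in truth]

rng = random.Random(1)
truth = [0.2] * 20 + [0.8] * 20  # static scanline with a sharp edge between pixels 19 and 20

# Spatial: a single frame smoothed in place; noise drops, but the edge gets smeared.
spatial = spatial_box_filter(noisy_frame(truth, rng), radius=2)

# Temporal: 30 frames of a static scene blended together; the edge stays sharp.
temporal = noisy_frame(truth, rng)
for _ in range(30):
    temporal = temporal_accumulate(temporal, noisy_frame(truth, rng))

# What the spatial filter does to the clean edge itself:
print([round(v, 2) for v in spatial_box_filter(truth, radius=2)[17:23]])
# [0.2, 0.32, 0.44, 0.56, 0.68, 0.8]
```

The spatial filter needs only one frame but turns the sharp jump from 0.2 to 0.8 into a ramp. The temporal filter keeps the edge sharp here only because nothing moves; with motion it smears detail across time instead, which is where ghosting comes from.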

Ray Reconstruction using AI

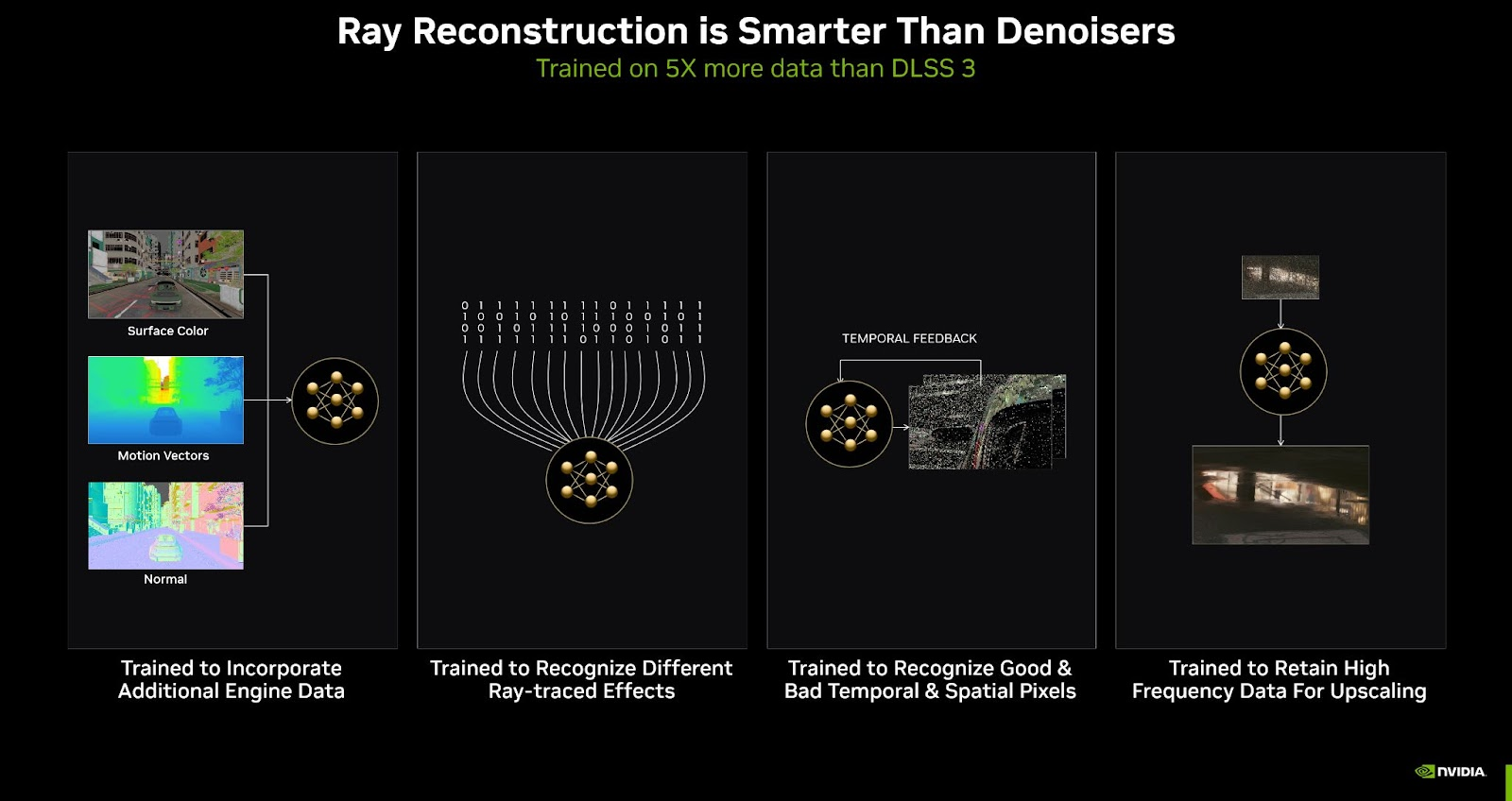

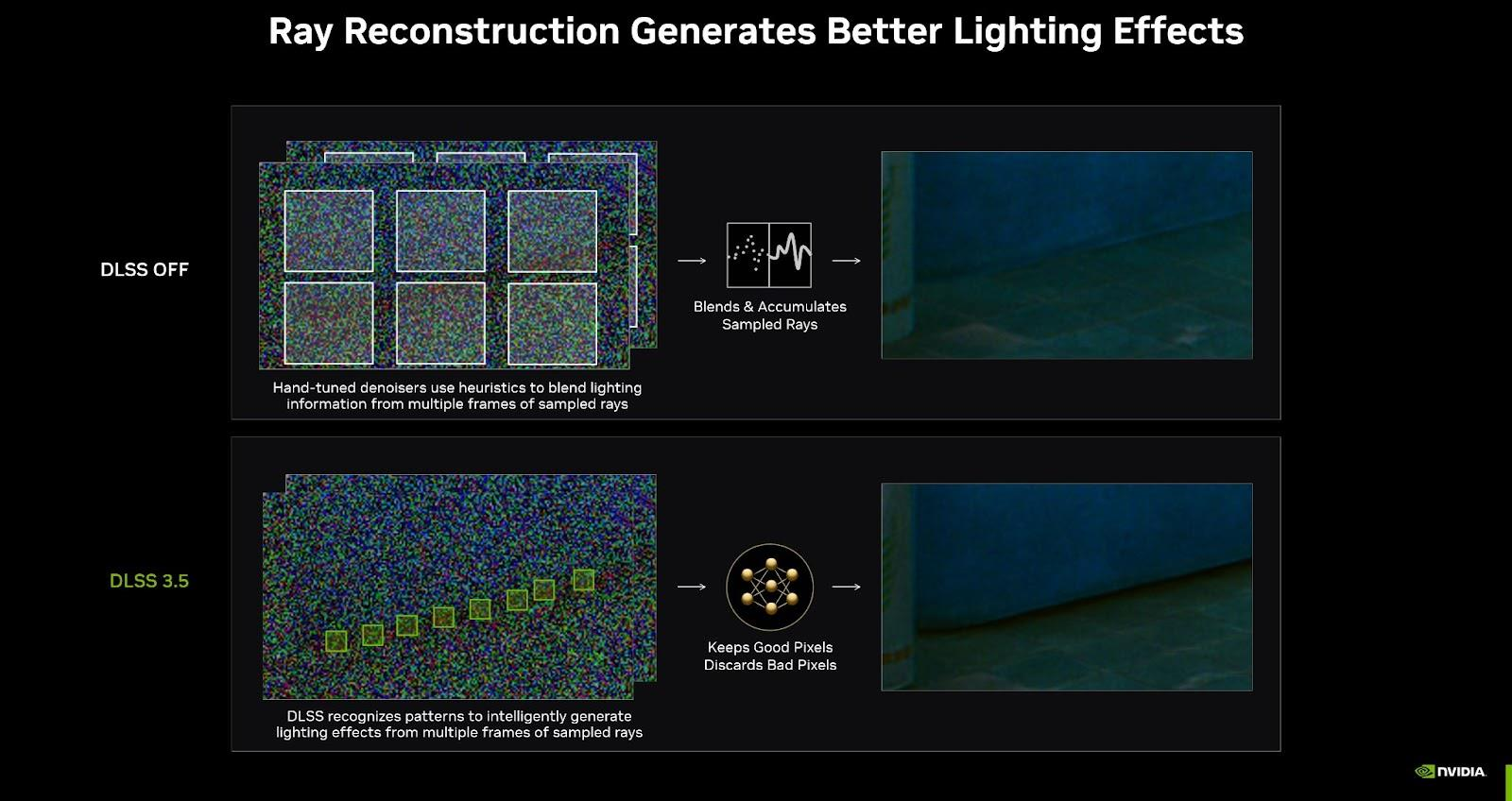

Denoising is one of the tasks for which pre-trained neural networks work well. Ray Reconstruction in DLSS 3.5 provides a dedicated neural network that is used at this point in the game’s rendering, replacing the usual conventional denoisers. For this purpose, the network is trained on a corpus of clean and noisy images, similar to the way the upscaling network is trained on original and downscaled images. According to Nvidia, the trained network should perform better than traditional denoisers.
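The training principle (learn a filter from pairs of noisy and clean images) can be shown at toy scale. In this hedged Python sketch, a linear 3-tap filter fitted by gradient descent stands in for the neural network; the signals, noise level and hyperparameters are arbitrary assumptions, and Nvidia’s actual model and training data are far larger and not public.

```python
import random

def apply_filter(row: list[float], w: list[float]) -> list[float]:
    """Apply a 3-tap filter w = [left, centre, right], clamping at the borders."""
    n = len(row)
    return [w[0] * row[max(i - 1, 0)] + w[1] * row[i] + w[2] * row[min(i + 1, n - 1)]
            for i in range(n)]

def mse(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def make_pair(rng: random.Random, n: int = 64, noise: float = 0.3) -> tuple[list[float], list[float]]:
    """Hypothetical training pair: a clean piecewise-constant signal and a noisy copy."""
    clean = [0.5 + 0.3 * ((i // 16) % 2) for i in range(n)]
    noisy = [c + rng.gauss(0.0, noise) for c in clean]
    return noisy, clean

def train(pairs, steps: int = 200, lr: float = 0.1) -> list[float]:
    """Fit the filter weights by gradient descent on the MSE against clean targets."""
    w = [0.0, 1.0, 0.0]  # start as the identity filter, i.e. no denoising at all
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for noisy, clean in pairs:
            n = len(noisy)
            out = apply_filter(noisy, w)
            for i in range(n):
                taps = (noisy[max(i - 1, 0)], noisy[i], noisy[min(i + 1, n - 1)])
                err = out[i] - clean[i]
                for k in range(3):
                    grad[k] += 2 * err * taps[k] / (n * len(pairs))
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
    return w

rng = random.Random(0)
weights = train([make_pair(rng) for _ in range(8)])
test_noisy, test_clean = make_pair(rng)  # a pair the filter never saw during training
print([round(x, 2) for x in weights])
print(mse(test_noisy, test_clean), mse(apply_filter(test_noisy, weights), test_clean))
```

Starting from the identity filter, training pushes the weights toward a blend of neighbouring pixels, and the error on the unseen pair drops. A real network learns far more elaborate, content-dependent behaviour, but the objective is the same idea: minimize the difference from the clean reference.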

Using this AI within DLSS 3.5 can therefore improve image quality, because the denoiser and its temporal component should preserve extra detail while preventing some of the artifacts (temporal ghosting, blurred detail) that current denoisers cause or cannot prevent.

This does not mean that the AI denoiser will be entirely free of its own artifacts or immune to loss of detail, but it should produce fewer of them than conventional denoisers while preserving more detail. Ideally, it should bring to this stage of raytracing benefits similar to those DLSS 2.x brought to upscaling.

In how it operates, this AI noise-removal filter is very close to DLSS 2.x: it performs both noise removal and, in effect, upscaling. Like DLSS 2.x, it uses various data from the game engine to enhance the rendered input, except that here the input is not the final scene frame but the raytracing lighting data. The filter is temporal and uses motion vectors: it combines several consecutive past frames, and by doing so it can also restore some detail that would otherwise be lost to the low resolution at which raytracing effects are rendered.
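Why motion vectors matter for a temporal filter can be seen in another 1-D toy. The Python sketch below assumes a hypothetical 3-pixel object moving 2 pixels per frame and an exactly known motion vector (in a game, the engine supplies these). Without reprojection, the history is blended at the wrong place and leaves a ghost trail; with it, the accumulated samples line up with the moving object.

```python
import random

def render_frame(t: int, rng: random.Random, n: int = 40, speed: int = 2, noise: float = 0.2):
    """Hypothetical noisy render of a 3-pixel bright object moving `speed` px per frame."""
    pos = 5 + speed * t
    clean = [1.0 if pos <= i < pos + 3 else 0.0 for i in range(n)]
    noisy = [c + rng.uniform(-noise, noise) for c in clean]
    return clean, noisy

def reproject(history: list[float], current: list[float], motion: int) -> list[float]:
    """Fetch each pixel's history from where it was last frame (i - motion).
    Pixels with no valid history (disocclusion) fall back to the current sample."""
    n = len(history)
    return [history[i - motion] if 0 <= i - motion < n else current[i] for i in range(n)]

def accumulate(use_motion_vectors: bool, frames: int = 10, speed: int = 2,
               alpha: float = 0.3, seed: int = 0):
    """Blend each new noisy frame into the history buffer, optionally reprojected."""
    rng = random.Random(seed)
    clean, history = render_frame(0, rng, speed=speed)
    for t in range(1, frames):
        clean, current = render_frame(t, rng, speed=speed)
        prev = reproject(history, current, speed) if use_motion_vectors else history
        history = [(1 - alpha) * p + alpha * c for p, c in zip(prev, current)]
    return clean, history

def mean_abs_error(a: list[float], b: list[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

for mv in (False, True):
    clean, result = accumulate(use_motion_vectors=mv)
    label = "with motion vectors" if mv else "without motion vectors"
    print(label, round(mean_abs_error(result, clean), 3))
```

Both runs use the same noise seed, so the difference in error comes from reprojection alone: without it, the object is dimmed and trailed by a fading ghost.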

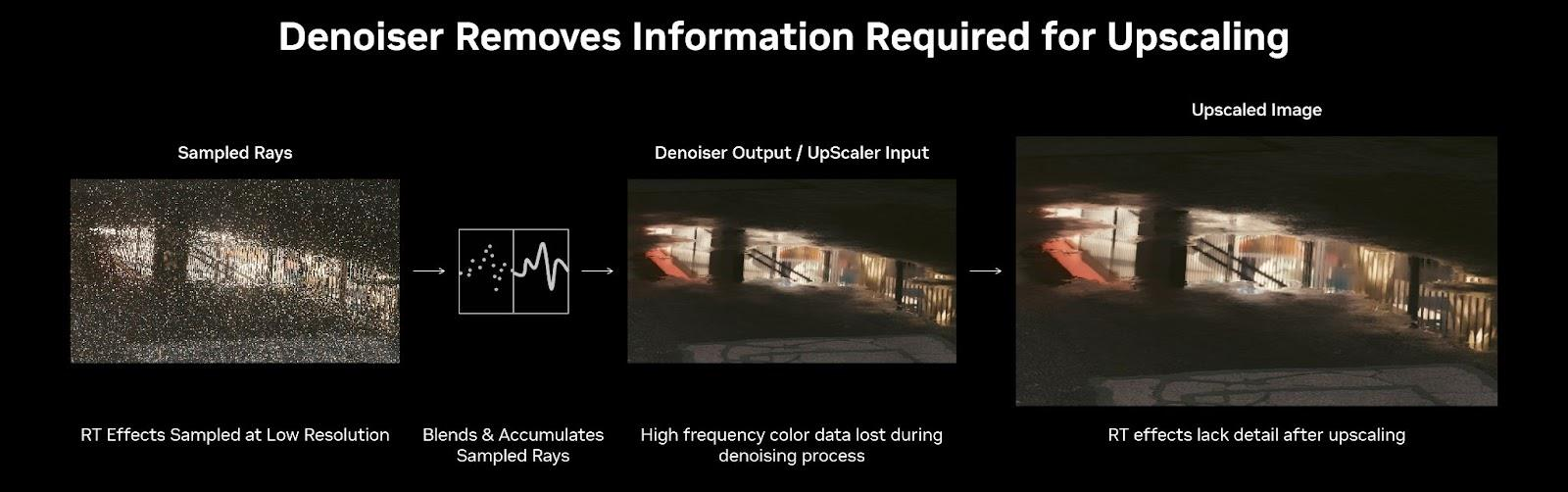

Integration with DLSS 2.x

An important element of the promised quality improvement is that this denoising AI is merged with the upscaling AI used for DLSS 2.x: a single model should perform both functions. This helps because the AI has more information to work with. If the two ran separately, DLSS 2.x would do a worse job of upscaling the lighting data, because the denoiser in front of it would already have removed some detail and information from its input. A model integrated in this way can still use that information in its decision making. This applies in particular to so-called “high-frequency information”: noise-removing filters, which typically act as low-pass filters, tend to remove it, yet for upscaling and temporal reconstruction it is useful for reconstructing and stabilizing detail at higher resolution and quality.
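The high-frequency argument can be illustrated with the finest detail a pixel grid can hold, a pattern that alternates every pixel. In this minimal Python sketch (a toy, with a 2-tap averaging filter as an assumed stand-in for a denoiser’s low-pass behaviour), the filter wipes the pattern out completely, so a separate upscaler running afterwards has nothing left to reconstruct, while a combined model would still see the original input.

```python
def box_filter_2tap(row: list[float]) -> list[float]:
    """Simple low-pass filter: average each pixel with its right neighbour (wrapping around)."""
    n = len(row)
    return [(row[i] + row[(i + 1) % n]) / 2 for i in range(n)]

def detail_amplitude(row: list[float]) -> float:
    """Strength of the finest possible detail: correlation with a +1/-1 alternating pattern."""
    return abs(sum(x * (-1) ** i for i, x in enumerate(row))) / len(row)

# Fine stripes (think thin grating or distant text), alternating every pixel.
stripes = [0.0, 1.0] * 8
denoised = box_filter_2tap(stripes)

print(detail_amplitude(stripes))   # 0.5
print(detail_amplitude(denoised))  # 0.0: a flat grey 0.5 remains, the stripes are gone
```

Noise also lives at these frequencies, which is why a plain low-pass filter cannot tell the two apart and discards both.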

Not only a quality improvement, but potentially a performance gain as well

The primary benefit should therefore be better raytracing effects and better-looking parts of the image that they generate, such as lighting, reflections and flares, especially when ray tracing is combined with DLSS upscaling (super resolution). However, there can sometimes also be a performance gain: the denoising calculations are offloaded to an AI model running on the tensor cores, which frees up the GPU shaders whose performance traditional denoisers consume. At least in some games, memory requirements also seem to be somewhat reduced.

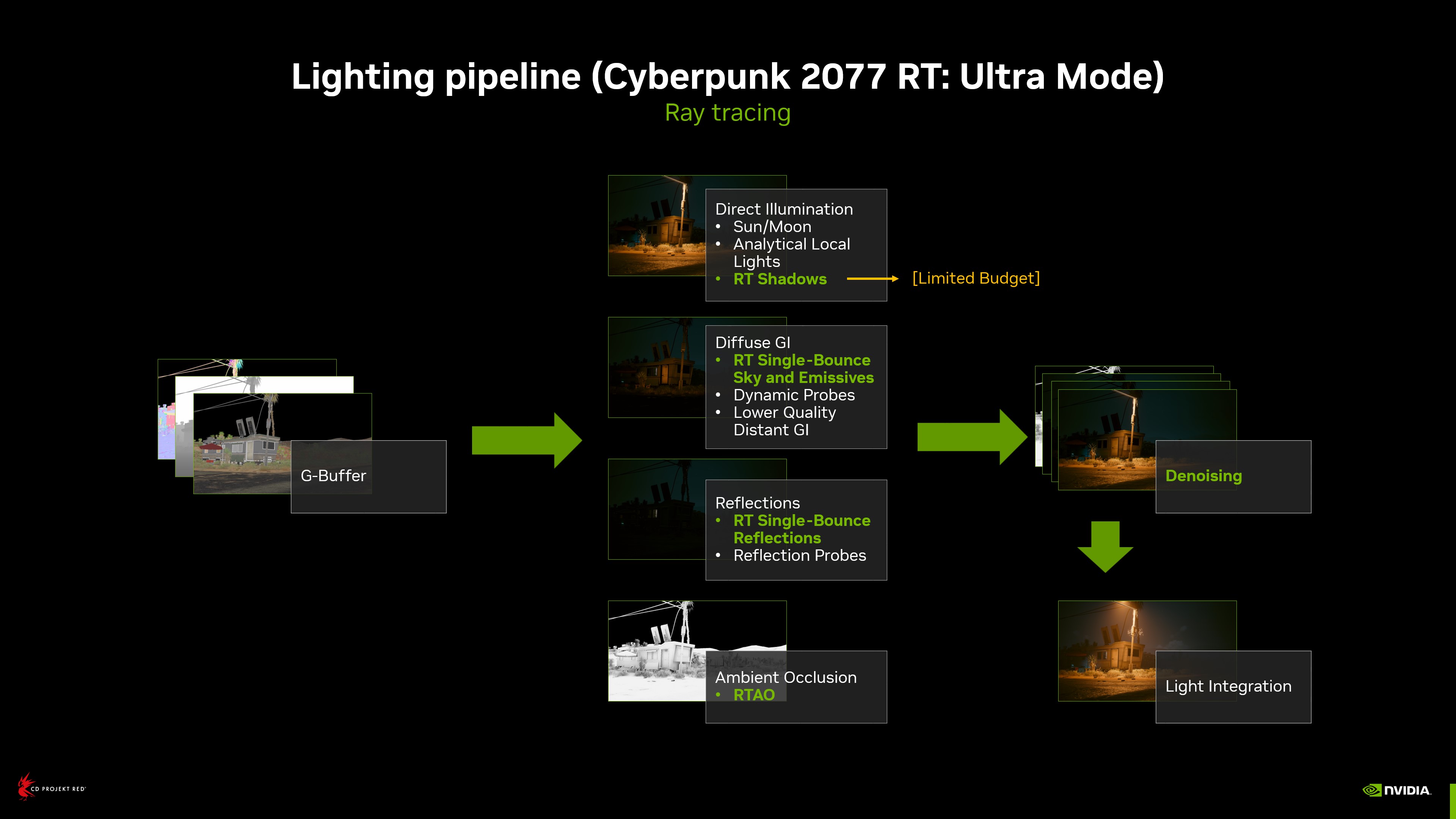

What is Overdrive Mode (Cyberpunk 2077)? A fully ray-traced game

While raytracing graphics effects have been common in games since 2018, previous implementations have been limited. Because of the high hardware requirements, it has not been possible to render an entire scene this way (as, for example, Cinebench does when drawing its benchmark scene, or as other rendering tools do when creating professional visualizations or even entire movie scenes). Games have so far used ray tracing only partially: for some objects (reflective surfaces, mirrors), for shadows, or for lighting effects. It was a hybrid approach that added raytracing effects to a “rasterized” scene.

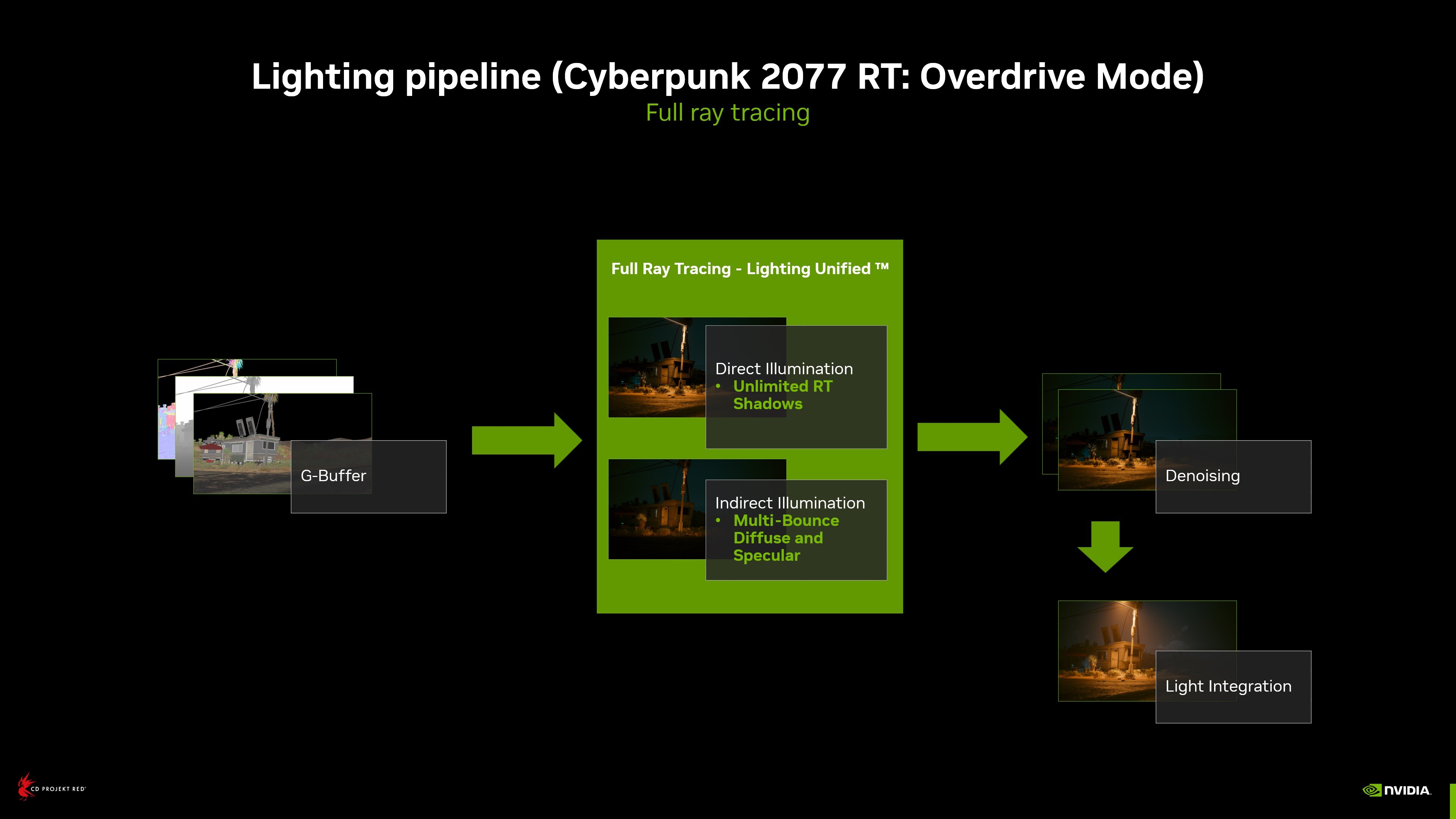

In the future, however, there should be games based on complete raytracing of the scene. With so-called path tracing, the game draws the complete scene (i.e. every pixel) using ray tracing, so the whole scene gets realistic lighting that takes all light sources into account, faithful reflections of light and objects, and physically correct shadows.

The first example of such a game (at least among big AAA titles) is Cyberpunk 2077, which launched with conventional hybrid rendering plus added raytracing effects. This year, however, it received a patch adding the so-called Ray Tracing Overdrive Mode, which is meant to render the game with full ray tracing.

In Overdrive Mode, virtually every light source in the scene (including car lights, neon lights, lamps) and its impact on the scene should be ray traced, with realistic effects and shadows. Ray tracing is also used for global illumination and indirect illumination from various light reflections.

Ray Tracing Overdrive Mode is, however, extremely demanding on hardware. The game has been heavily optimized and uses Nvidia-optimized raytracing denoisers, which, much like upscaling, reconstruct a clean image from incomplete data (see the section on Ray Reconstruction).

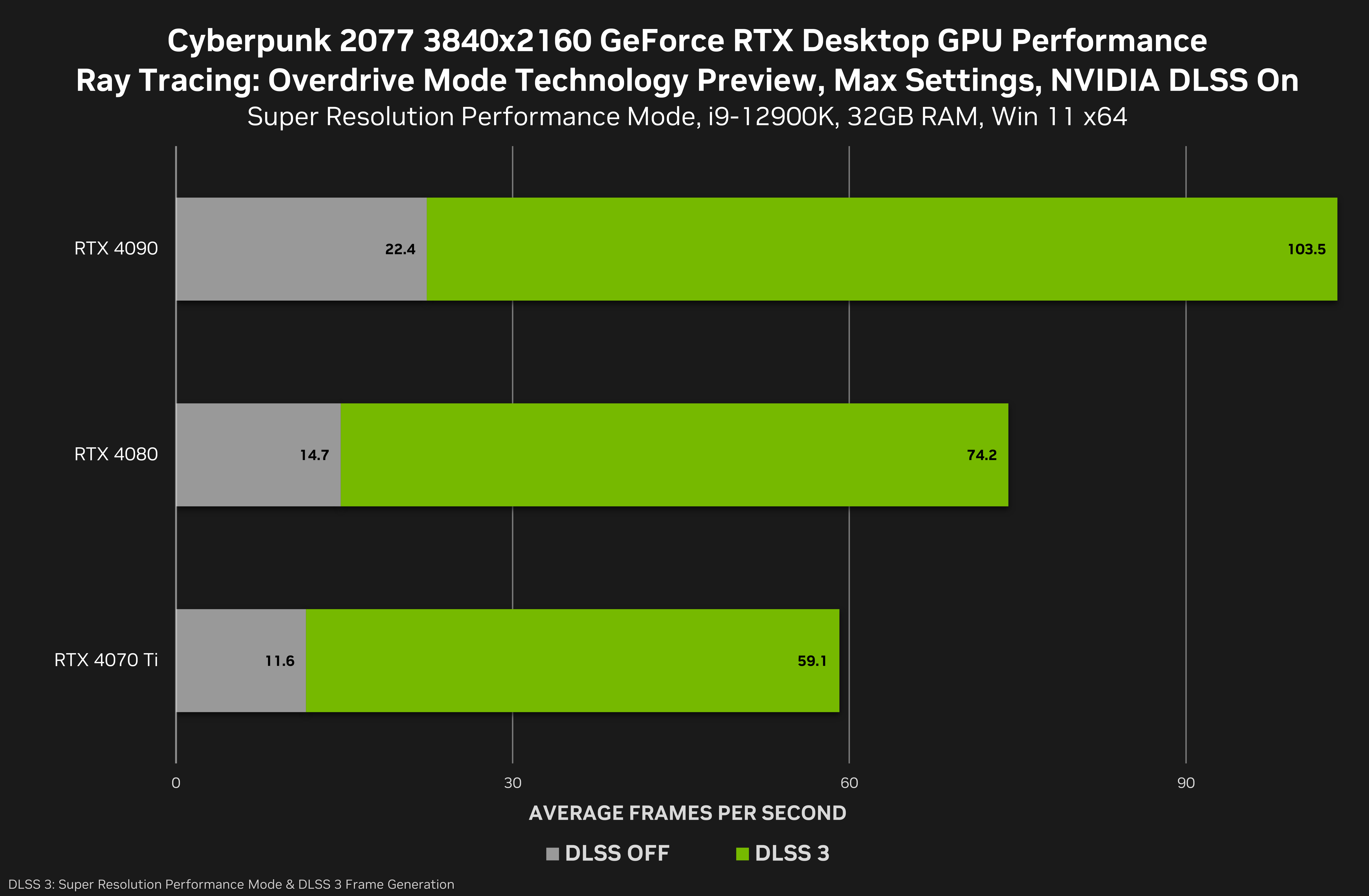

Even so, on today’s GPUs such a game is more or less playable only thanks to technologies like upscaling/super resolution (DLSS 2.x) and frame generation (DLSS 3/3.5), which turn the fairly low resolution and frame rate a GPU can deliver today into higher-resolution, smoother output.
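A bit of arithmetic shows why upscaling helps so much here. In this Python sketch, the 50% per-axis render scale, one sample per pixel and two bounces are assumptions chosen for illustration (not Cyberpunk 2077’s actual settings), and the ray count ignores shadow rays and other details of a real path tracer. Frame generation is modelled in its ideal case: one generated frame inserted per rendered frame, with no overhead.

```python
def rays_per_frame(width: int, height: int, samples_per_pixel: int, bounces: int) -> int:
    """Rough ray count: each sample traces one primary ray plus one ray per bounce."""
    return width * height * samples_per_pixel * (1 + bounces)

output = (3840, 2160)  # 4K output resolution
scale = 0.5            # assumed per-axis render scale of the upscaler
internal = (int(output[0] * scale), int(output[1] * scale))

native = rays_per_frame(*output, samples_per_pixel=1, bounces=2)
upscaled = rays_per_frame(*internal, samples_per_pixel=1, bounces=2)
print(internal, native / upscaled)  # (1920, 1080) 4.0

rendered_fps = 30                 # hypothetical frame rate the GPU actually renders
presented_fps = rendered_fps * 2  # ideal frame generation: one generated frame per rendered frame
print(presented_fps)              # 60
```

Halving the resolution on each axis cuts the ray budget to a quarter, and frame generation then roughly doubles the number of frames shown, which together is what makes full ray tracing playable.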

- Contents

- Frame Generation. What is it about?

- Nvidia Reflex: Principle of operation

- Ray Reconstruction: The new feature brought by DLSS 3.5

- Testing methodology

- Test results – Ray Tracing: Overdrive Mode

- Test results – Ray Tracing: Ultra

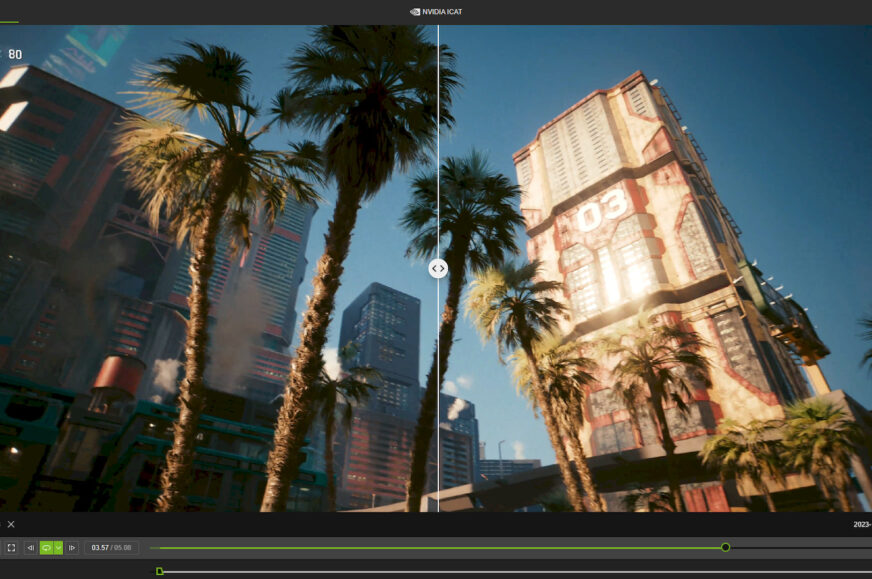

- Comparison of image quality and Nvidia ICAT

- Conclusion